While certain bots like web crawlers are helpful to businesses, others can impact ad revenue or be used to break security systems.

The existence of bad bots turns bot traffic detection into an essential task. In this guide, we will explore different types of bots, find out how to prevent bot traffic, and more.

Key Takeaways

Bot traffic includes both helpful bots (like search engine crawlers and monitoring tools) and harmful ones (click bots, spam bots, credential stuffers, DDoS bots).

Bad bots negatively affect analytics, ad budgets, website performance, and user trust by mimicking real behavior, inflating metrics, and performing fraudulent actions.

Common detection signs include abnormal traffic spikes, suspicious IPs or locations, high bounce rates, and unrealistic engagement metrics.

Tools like Ads.txt, Sellers.json, and SupplyChain Object (from IAB) improve transparency and help identify fraudulent actors across the programmatic chain.

Attekmi’s AdTech platforms integrate advanced anti-fraud solutions (Pixalate, WhiteOps, Forensiq) to filter malicious bot traffic and protect revenue, performance, and brand safety.

Anatomy of bot traffic: from definition to types

Bot traffic involves automated software programs, or bots, that mimic human interaction with web content. These bots can be categorized by their functions: search engine bots, social media bots, scraper bots, spam bots, click bots, credential stuffing bots, chatbots, DDoS bots, hacking bots, and more. Understanding these types of bot software is crucial for managing and mitigating their impact on websites and online platforms.

What is bot traffic?

So, what exactly does the term “website bot traffic” mean? Simply put, it is all non-human traffic: online activity performed by automated computer programs rather than by people.

Even though the words “bot traffic” may bring the Terminator or Megatron to mind, non-human traffic doesn’t necessarily have a negative connotation. In fact, there is plenty of good bot traffic, such as search engine crawlers, and without them, the Internet wouldn’t be as user-friendly as it is today. Tools like ads.txt and sellers.json help manage and filter out invalid traffic: both ensure transparency and reduce ad fraud, which helps distinguish good traffic from bad. So, let’s sort out the types of bots and how to filter them.

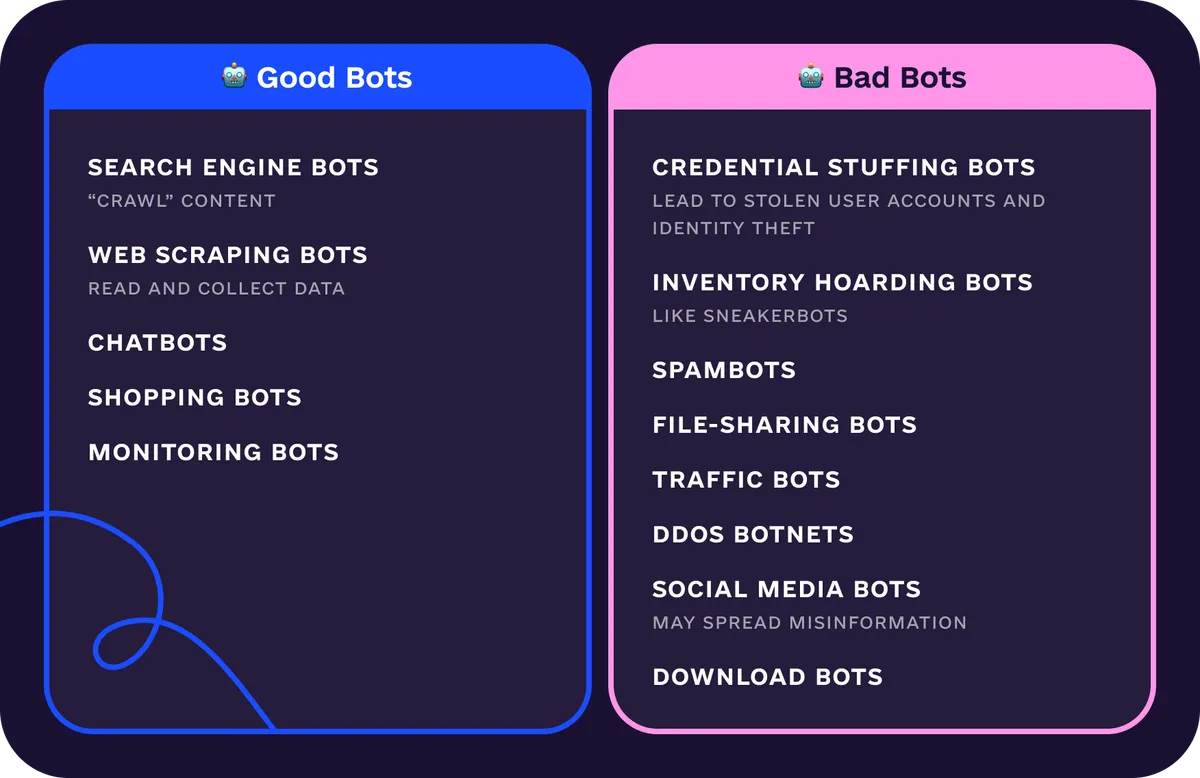

The good bots

Good bots collect information about sites on the Internet to make the WWW a better place for all users. They come in the form of SEO/search engine bots (crawlers), monitoring bots, digital assistants, and other useful services that scan websites for copyright compliance and detect questionable activities. Among other things, this influences sites’ rankings and, eventually, what appears on the first pages of your Google (or other search engine) results.

Good bots list:

Search Engine Bots (Crawlers): These bots, like Googlebot, index web pages to facilitate search engine rankings. They follow links, read content, and gather information for search results;

Monitoring Bots: These bots perform legitimate functions such as website health checks, security scans, and performance monitoring to ensure optimal website operation;

Chatbots: Automated chatbots engage users in conversation, providing information or assistance, enhancing user experience when used effectively;

Social Media Bots (Legitimate): Some social media bots automate tasks like scheduling posts or providing customer support on social platforms, improving efficiency;

Data Retrieval Bots: These are used by search engines to gather information and index web content, and they help users find relevant information online.

The bad bots

Malicious bots intentionally harm performance for their developers’ profit. Bad bots go by different names depending on the source (click fraud bots, DoS bots, and so on), but you can always identify bot traffic by its intentions:

Intention to mimic real users – these bots are often used in DDoS attacks (distributed denial of service). A group of devices infected with malware connects to a server/network to slow the website’s performance (by bots browsing the site for a long while at an unusually slow rate) or make it unavailable to legitimate users.

Fake traffic bots may also be called imposter bots, as they pretend to be genuine visitors. Impersonator bots account for the majority of all bad bot traffic.
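One practical defense against imposter bots is checking whether a visitor that claims to be a well-known crawler really is one. Google documents a reverse-then-forward DNS check for Googlebot. The sketch below follows that check using Python's standard `socket` module. It assumes the hostnames must end in `googlebot.com` or `google.com`, as documented at the time of writing. The helper name `is_verified_googlebot` is hypothetical, and the lookup functions are parameters so the logic can be tested without network access.

```python
import socket
from typing import Callable

# Reverse-DNS suffixes Google documents for Googlebot (assumption: current at time of writing).
GOOGLEBOT_DOMAINS = (".googlebot.com", ".google.com")

def is_verified_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = lambda addr: socket.gethostbyaddr(addr)[0],
    forward_lookup: Callable[[str], list[str]] = lambda host: socket.gethostbyname_ex(host)[2],
) -> bool:
    """Return True only if `ip` passes a reverse-then-forward DNS check for Googlebot."""
    try:
        hostname = reverse_lookup(ip)          # step 1: IP -> hostname (PTR record)
    except OSError:
        return False                           # no PTR record: cannot be verified
    if not hostname.endswith(GOOGLEBOT_DOMAINS):
        return False                           # hostname outside Google's domains
    try:
        addresses = forward_lookup(hostname)   # step 2: hostname -> IPv4 addresses
    except OSError:
        return False
    return ip in addresses                     # step 3: must point back to the same IP
```

If a request's user agent says “Googlebot” but its IP fails this check, the visitor is very likely an imposter. Note that `gethostbyname_ex` only returns IPv4 addresses, so IPv6 crawler traffic would need `socket.getaddrinfo` instead.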

Bad bots list:

Spam Bots: These bots flood websites, comment sections, and forums with unsolicited content, advertisements, or links, often for malicious purposes or to manipulate SEO rankings;

Click Bots: Designed to artificially inflate click-through rates on ads or web links, click bots can deceive advertisers and impact ad revenue;

Credential Stuffing Bots: These bots use stolen or leaked login credentials to gain unauthorized access to user accounts, leading to security breaches and fraud;

DDoS Bots: Distributed Denial of Service bots overload websites with traffic, causing downtime and disrupting services, often as part of cyberattacks;

Hacking Bots: Bots used for hacking purposes exploit vulnerabilities, attempt to breach security systems, or perform automated attacks, posing significant security risks.

Consequences of bad bots

This kind of abusive bot traffic distorts analytics data and reports, skewing page views, session duration, and bounce rate, often on just a single page.

New businesses are often tempted to buy bot traffic at around $2 per 1,000 users when starting out, to look more credible to real visitors. While the idea seems appealing at first, bot traffic hurts organic visits in the long run, and the consequences are hard to “rewind.”

Intention to mimic real engagement

These are usually called spam bots (targeting other websites’ URLs). Their task is to post inappropriate comments on social media and in website reviews, fill in contact forms with fake information, including phone numbers (aka form-filling bots), write phishing emails with links to fraudulent websites, imitate page views, and so on.

This type of bot traffic results in false analytics metrics and reports, discrepancies, poor organic engagement, a higher bounce rate, and an awkward social media presence.

Intention to mimic real clicks

Click fraud can occur in a variety of ways (spamming or injection), for example, through malware that triggers fake clicks on PPC (pay-per-click) ads. These bots make PPC ad campaigns hard to run profitably, and click bots are among the most common malicious bots.

A subcategory of these action bots is inventory hoarding bots. They aim to spoil e-commerce stats and performance by putting items in the cart, making them unavailable to legitimate users. Inventory hoarding bots are not as common as click bots but should be kept in mind.

Fake bot clicks lead to suspiciously high CTR and low or junk conversions, wasting advertisers’ budgets. Fake ad clicks also distort analytics and skew developers’ A/B test results.

Intention to mimic real downloads/installs

These are automated systems that perform downloads or installs. Third parties use them as part of DoS (denial of service) attacks to slow down or halt the performance of an app or website.

However, app and website owners can also use these bots to inflate their real download/install figures and make the product look more appealing to real users (say, in Google Play or the App Store).

These types of bots also lead to faulty statistics and can affect apps’ positions in mobile stores.

Intention to steal data/content

These bots can do a variety of things. Impersonation, or domain spoofing, is one of the main tactics: for example, malware injects different ads into a website’s traffic without the site owner noticing and then collects the revenue. These bots can also crawl search results, look for personal data and IP addresses, steal content and scrape it (for example, to help fake websites rank higher in search results), and mimic human behavior.

Malicious bots have many consequences, from fraudulent traffic slowing down access for human visitors to a website losing ad revenue.

How can bot traffic affect business?

Bot traffic can have both positive and negative effects on businesses. Negative impacts include financial losses from ad fraud, decreased credibility from spam or malicious bots, security risks like hacking attempts, resource drain from DDoS attacks, and inaccurate website analytics.

On the positive side, good bots improve efficiency, enhance user experience, and assist with data retrieval. To mitigate the negative effects, businesses should implement bot detection techniques and prevention measures while leveraging the benefits of good bots to enhance their operations.

How to detect bot traffic?

Now that we have identified the types of bots and their potential and practical danger, let’s look at the best ways to detect and block them. So, what is bot detection? Knowing the enemy is step one. Step two is identifying bot traffic on your own resources.

To get a full view of the potential danger, you would have to dive into your ad analytics and examine all major deviations and suspicious activity in the reports. According to Google Analytics and other sources, these are the main signs of bot activity:

Sudden, unexplained increases in analytics metrics (from visits and an abnormally high bounce rate to extremely long session duration on a single page);

Abnormally low or abnormally high page views;

Sudden problems with site availability, speed, or performance;

Suspicious site lists, unexpected locations, data centers, and IP addresses (for example, in Google Analytics referral traffic).
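The first sign in the list, a sudden and unexplained spike, can be checked automatically. Below is a minimal sketch that assumes you can export daily visit counts from your analytics tool. It flags any day whose visits exceed the trailing average by more than a chosen number of standard deviations. The function name, the 7-day window, and the threshold of 3 are illustrative assumptions, not values taken from any specific tool.

```python
from statistics import mean, stdev

def flag_traffic_spikes(daily_visits: list[int], window: int = 7,
                        threshold: float = 3.0) -> list[int]:
    """Return the indexes of days whose visits jump far above the trailing window."""
    spikes = []
    for day in range(window, len(daily_visits)):
        history = daily_visits[day - window:day]   # the previous `window` days only
        baseline = mean(history)
        spread = max(stdev(history), 1.0)          # floor avoids a zero spread on flat traffic
        if daily_visits[day] > baseline + threshold * spread:
            spikes.append(day)
    return spikes

visits = [100, 102, 98, 101, 99, 100, 103, 100, 950, 101]
print(flag_traffic_spikes(visits))  # [8]
```

A flagged day is only a prompt to investigate the IPs, locations, and referrers behind it. A marketing campaign or press coverage can cause a legitimate spike too.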

There are a number of analytics tools that go well beyond simple Google searches like “how to eliminate bot traffic.” They range from general-purpose ones, such as the aforementioned Google Analytics, to specialized tools trained not only to detect bot traffic but also to tell whether a visitor is a spam bot, a good bot, or a real human user.

How to stop bot traffic on a website?

To remove bad bot traffic, publishers can:

Use device identification;

Use CAPTCHA/reCAPTCHA;

Disallow access from suspicious data centers;

Protect user accounts against bot attacks (such as credential stuffing);

Use a CDN (content delivery network): it is a good solution against basic and moderately smart bots, including DDoS attacks;

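Install a robots.txt file. It is a kind of roadmap that tells bots (good and bad) which parts of your site they may access. Keep in mind that compliance is voluntary: well-behaved crawlers follow it, while malicious bots can simply ignore it;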

Use rate-limiting solutions. These tools cap the number of requests a single client (usually identified by its IP address) can make within a given time window. Rate limiting will not stop bot traffic once and for all, but it detects and curbs sudden spikes in activity from one IP address.
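Rate limiting, the last item above, can be sketched as a sliding-window counter per IP address. This is an in-memory, single-process illustration, and the class name and limits are hypothetical. A production setup would usually enforce limits at the CDN, the WAF, or a shared store so that every server sees the same counts.

```python
import time
from collections import defaultdict, deque
from typing import Callable

class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client IP within the last `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock                      # injectable for testing
        self._hits: dict[str, deque] = defaultdict(deque)

    def allow(self, ip: str) -> bool:
        now = self.clock()
        hits = self._hits[ip]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False                        # over the limit: block, delay, or show a CAPTCHA
        hits.append(now)                        # only accepted requests count toward the limit
        return True

limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)
print([limiter.allow("203.0.113.7") for _ in range(4)])  # [True, True, True, False]
```

Rejected requests are not counted here, so a client that backs off regains access once its older requests leave the window. Counting rejections as well would punish persistent bots more harshly, at the cost of also locking out real users who retry quickly.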

Essentially, use basic knowledge from your digital marketing experience.
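The robots.txt item in the list above is a roadmap, not a barrier, and it helps to see why. The sketch below shows how a well-behaved crawler consults robots.txt using Python's standard `urllib.robotparser` module. The robots.txt content, the `BadBot` user agent, and the example.com URLs are hypothetical. Checking the rules is the crawler's own choice, and a malicious bot simply skips it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all bots out of checkout/admin, and "BadBot" out entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /admin/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler asks before fetching; nothing forces a malicious bot to do the same.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))     # True
print(parser.can_fetch("Googlebot", "https://example.com/checkout/cart"))  # False
print(parser.can_fetch("BadBot", "https://example.com/blog/post"))        # False
```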

Some systems, such as Google Analytics, automatically exclude traffic coming from known bots and spiders. However, not all systems put up such a barrier against bad bot traffic. As we will learn in the following sections, not all bot traffic can be fought off by CAPTCHA or Google Analytics filters. Automated traffic bots are getting smarter by the day and are here to do more than mess up our bounce rate and page views.

It’s also pretty easy to detect bot traffic if you have your own ad network.

3 challenges in bot detection

Bot traffic detection is a crucial task to perform, but it may be associated with the following challenges.

Imitation of human behavior

The more advanced bots get, the better they become at mimicking real human behavior. Detecting such traffic may require more thorough behavior and interaction analysis, as well as the use of AI and ML tools.
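As a simple illustration of behavior analysis, consider how regular the timing between a visitor's actions is. People click at uneven intervals, while naive scripts often fire at near-constant ones. The sketch below computes the coefficient of variation (standard deviation divided by mean) of the gaps between event timestamps. The 0.1 cut-off and the function names are hypothetical. Sophisticated bots deliberately add random jitter, so this catches only the simplest automation, and real systems combine many such signals, often in ML models.

```python
import math
from statistics import mean, pstdev

def interval_variation(timestamps: list[float]) -> float:
    """Coefficient of variation of the gaps between consecutive events (NaN if too few)."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return math.nan                     # not enough data to judge
    return pstdev(gaps) / mean(gaps)

def looks_scripted(timestamps: list[float], max_variation: float = 0.1) -> bool:
    """Flag sessions whose actions are spaced almost perfectly evenly."""
    variation = interval_variation(timestamps)
    return not math.isnan(variation) and variation < max_variation

print(looks_scripted([0.0, 1.0, 2.0, 3.0, 4.0, 5.0]))  # True: one action every second
print(looks_scripted([0.0, 0.8, 3.1, 3.9, 7.5, 8.2]))  # False: irregular, human-like gaps
```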

Bot networks

A bot network (or botnet) is a group of bots operating toward a single goal. It gets created when malware infects a large number of devices and is rather complicated to detect. Analyzing performance patterns and utilizing special botnet detection tools are ways to overcome this challenge.
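One way to analyze behavior patterns across a botnet is to look for identical behavior spread over many different IP addresses. A single person rarely shows up from dozens of IPs, but infected devices running the same script often do. The sketch below groups sessions by a simple signature (user agent plus click path). The `Session` fields, the signature, and the 50-IP threshold are illustrative assumptions. Real users also share popular landing-page paths, so both the threshold and the signature need tuning against your own traffic.

```python
from collections import defaultdict
from typing import NamedTuple

class Session(NamedTuple):
    ip: str
    user_agent: str
    click_path: tuple[str, ...]   # ordered pages/ads the session touched

def suspicious_clusters(sessions: list[Session],
                        min_ips: int = 50) -> dict[tuple, set[str]]:
    """Group sessions by (user agent, click path); keep groups spread over many IPs."""
    ips_by_signature: dict[tuple, set[str]] = defaultdict(set)
    for s in sessions:
        ips_by_signature[(s.user_agent, s.click_path)].add(s.ip)
    return {sig: ips for sig, ips in ips_by_signature.items() if len(ips) >= min_ips}

bot_path = ("/", "/ad/42", "/landing")
sessions = [Session(f"10.0.0.{i}", "UA-X", bot_path) for i in range(60)]
sessions.append(Session("192.0.2.10", "UA-Y", ("/", "/blog")))
for signature, ips in suspicious_clusters(sessions).items():
    print(signature, len(ips))   # ('UA-X', ('/', '/ad/42', '/landing')) 60
```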

Continuous evolution

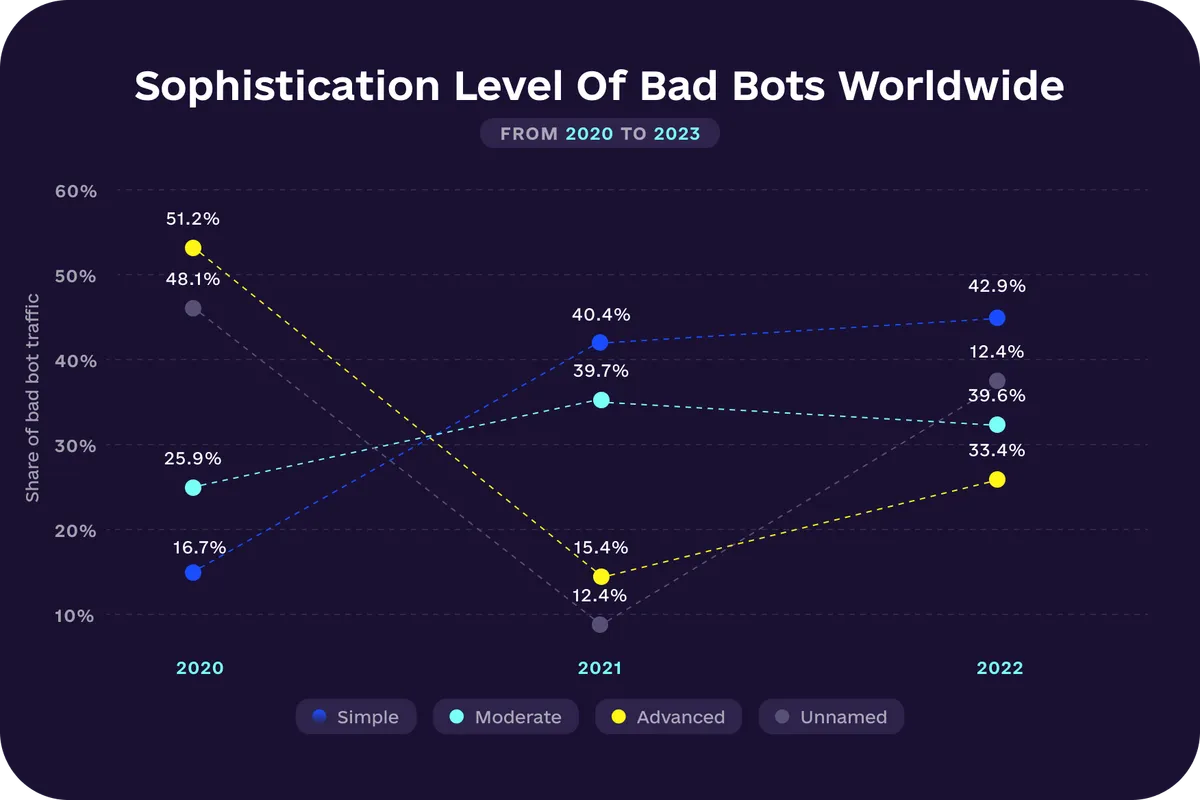

Unfortunately, bots are getting more and more sophisticated, and detection evasion techniques have evolved, too. It’s crucial to keep an eye on their progress to fine-tune detection strategies and minimize abusive bot traffic.

Global bot traffic statistics

Since the birth of the first web bots in June 1993, whose sole task was to measure the size of the Internet, a lot has changed.

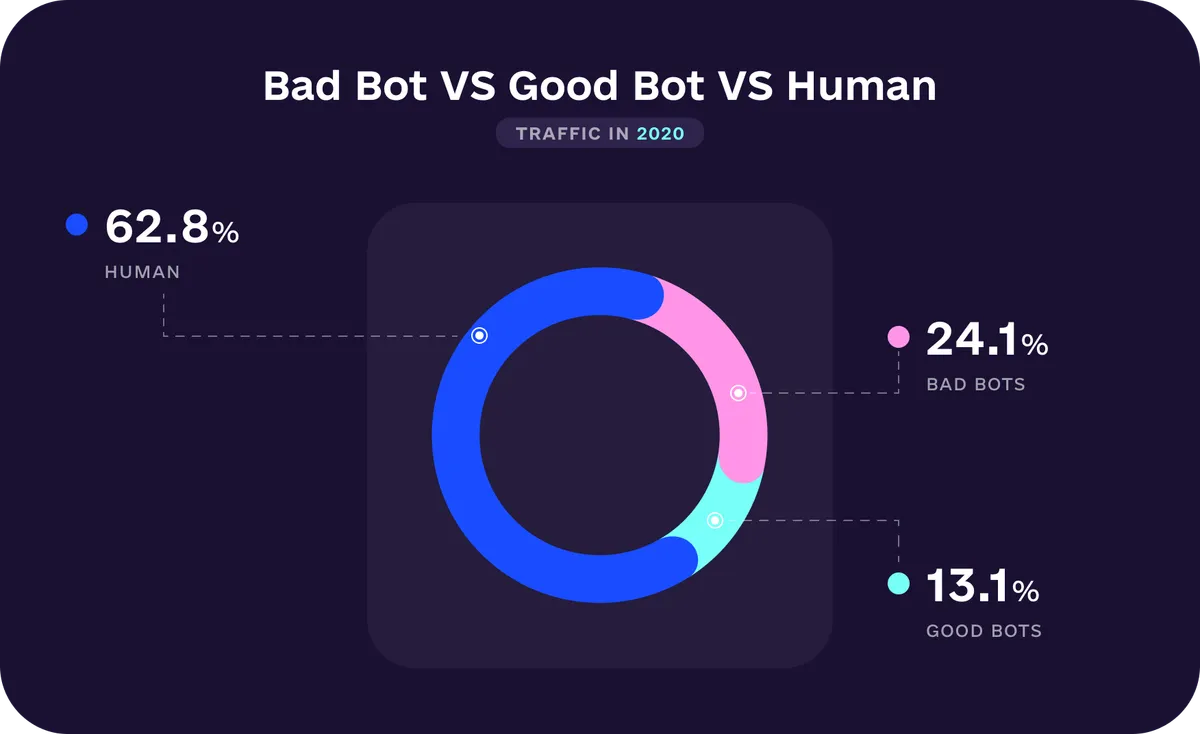

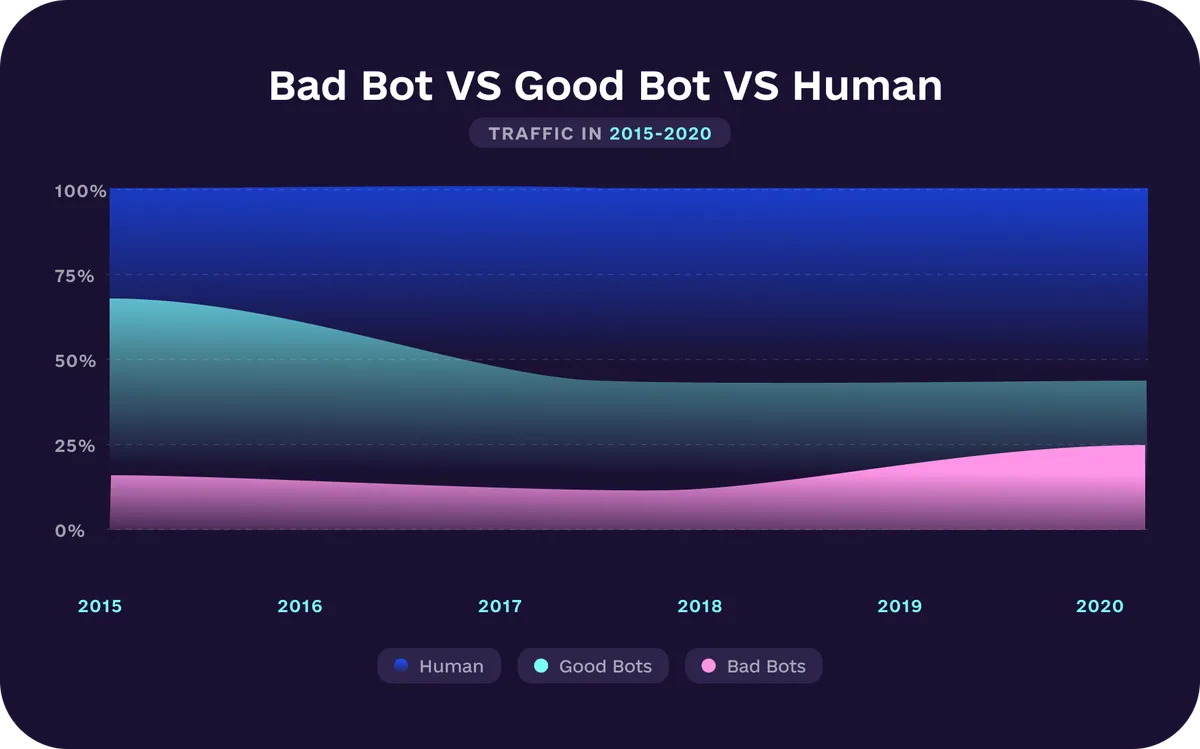

According to Imperva, in 2019, a little over 37% of all Internet traffic came from bots: 13% from good bots and 24% from bad bots. Even though the total share of non-human traffic has been dropping over the years, the ratio between good and bad bots has changed. Back in 2014, most bots were good ones: crawling for Google or other search engines, kindly measuring the “average temperature” across the Internet. Today, the situation has turned upside down.

The amount of malicious bot traffic is slowly declining, and the budget spent on digital advertising is constantly growing. According to eMarketer, the global ad budget for digital advertising equaled roughly $135 billion in 2014; in 2020, it amounted to $378 billion.

At the same time, $35 billion was lost to ad fraud in 2020, and the World Federation of Advertisers estimates that the figure will reach $50 billion by 2025!
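To put these figures in perspective, here is the simple arithmetic behind them (amounts in billions of US dollars). The 2020 figures come from different estimates, so the ratio is only a rough indication. The annual growth rate also assumes steady compound growth between 2014 and 2020.

```latex
\begin{aligned}
\text{digital ad spend growth, 2014 to 2020:}\quad & \frac{378}{135} = 2.8,
  \qquad 2.8^{1/6} - 1 \approx 0.187 \;(\approx 18.7\%\ \text{per year})\\
\text{ad fraud relative to 2020 spend:}\quad & \frac{35}{378} \approx 0.093 \;(\approx 9.3\%)
\end{aligned}
```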

When we think about bad bot traffic, we tend to picture other sites being attacked and assume that bots will not be interested in our own resources. That is false: bots will attack any vulnerable place they can find.

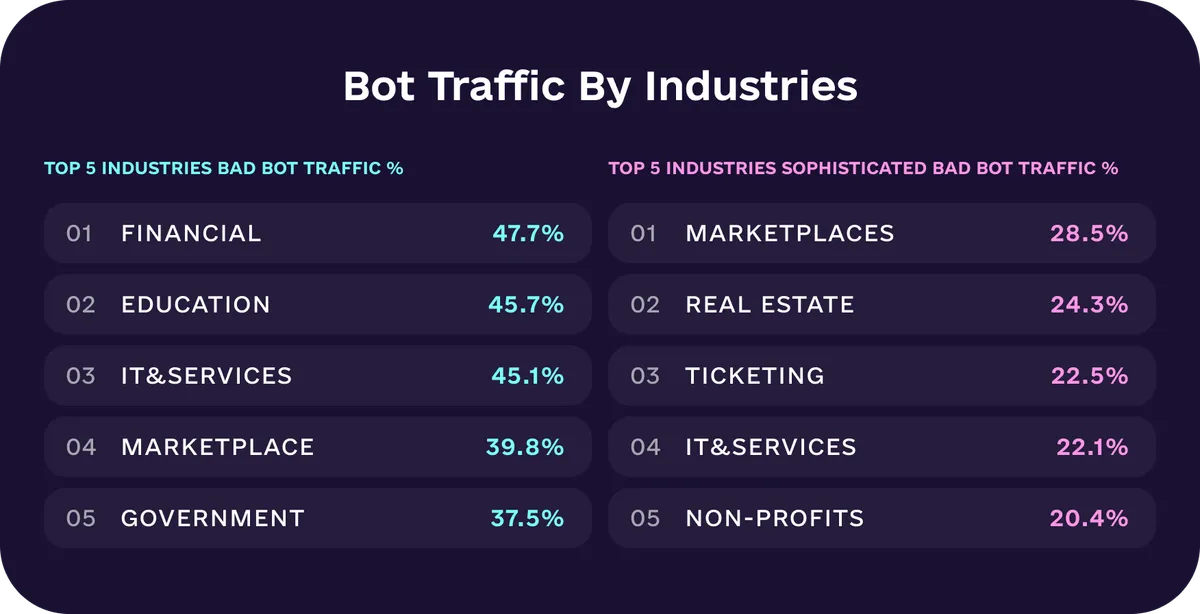

While many businesses have a common website traffic bot problem, some sophisticated bots target particular industries.

Sophisticated programmatic bot traffic, and how Attekmi can help fight it

Now that we have looked at all the general information, it is time to dive deeper into the theme of ad fraud. It is necessary to reveal more complex issues and ways to manage and combat bot traffic.

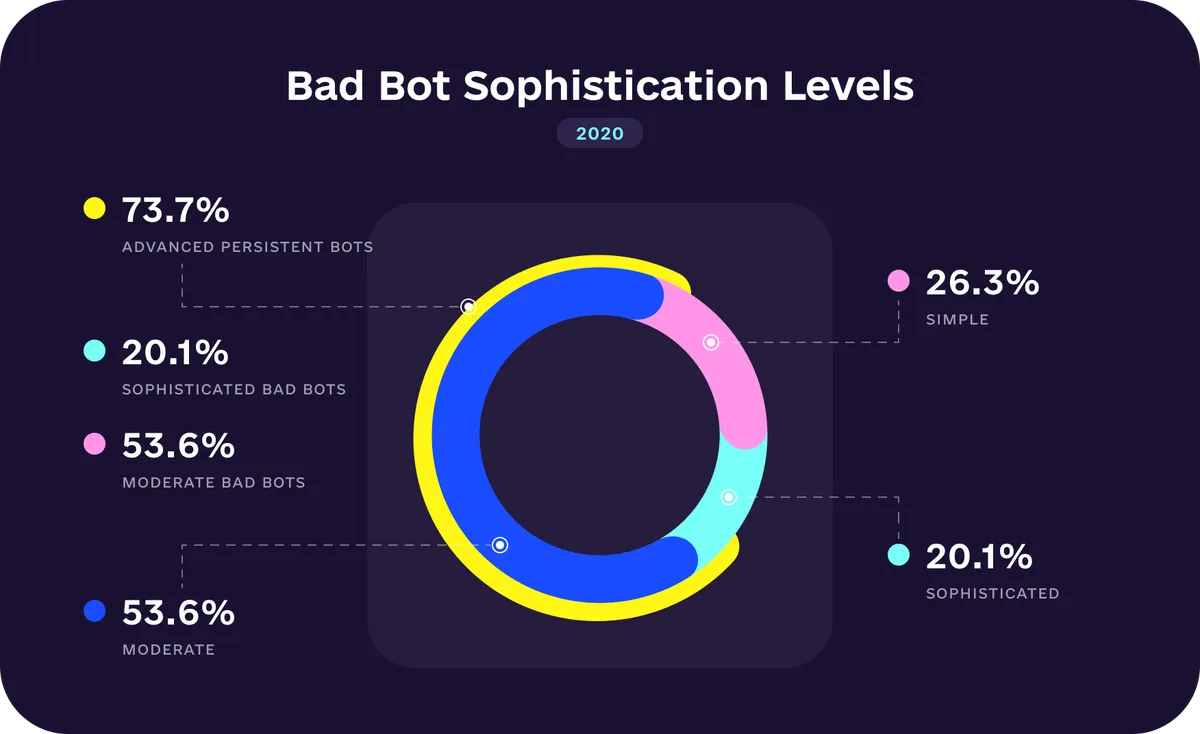

The sophisticated bots

Google Analytics, CAPTCHA, and free bot-blocking tools alone cannot stop all bot traffic, because not all bots are simple programs that perform basic commands and repetitive tasks. Some are, in fact, very sophisticated and can bypass most anti-bot systems while committing click fraud or generating fake installs.

Sophisticated bots are a subcategory of bad bots, but they are the worst because they mimic human traffic so well that it is hard to distinguish them, even using special tools. In cinema terms, basic bots are extras on the set, and sophisticated bots demonstrate an Oscar-winning performance. What is vital is to detect bots and learn how to block bot traffic (the bad one, of course).

Source: Statista

Ways to avoid bot traffic, including sophisticated bots

1. Ads.txt

Ads.txt, an initiative created by the IAB, was the first step toward programmatic transparency. Its main goal was to prevent domain spoofing and the unsanctioned sale of inventory by unauthorized companies.

Basically, it is a text file (not to be confused with robots.txt) placed in the root directory of a website that lists the companies authorized to sell the publisher’s inventory. It benefits both advertisers (mainly online advertising networks) and publishers. Buyers can check that they are purchasing from an authorized seller and protect their own platforms, while publishers can keep track of who is allowed to sell their traffic at any given moment.
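To make the idea concrete, here is a simplified sketch of how a buyer might check a seller against a publisher's ads.txt. It assumes the basic record format from the IAB Tech Lab specification: advertising system domain, publisher account ID, DIRECT or RESELLER, and an optional certification authority ID. The sketch skips comments and variable lines such as `CONTACT=`, and it ignores the optional fourth field. The sample domains, IDs, and helper names are hypothetical.

```python
from typing import NamedTuple

class AdsTxtRecord(NamedTuple):
    ad_system: str      # domain of the SSP/exchange
    account_id: str     # the publisher's account ID on that system
    relationship: str   # "DIRECT" or "RESELLER"

def parse_ads_txt(text: str) -> list[AdsTxtRecord]:
    records = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()    # drop comments and surrounding spaces
        if not line:
            continue
        fields = [field.strip() for field in line.split(",")]
        if "=" in fields[0] or len(fields) < 3:
            continue                                 # variable line (CONTACT=...) or malformed
        records.append(AdsTxtRecord(fields[0].lower(), fields[1], fields[2].upper()))
    return records

def is_authorized(records: list[AdsTxtRecord], ad_system: str, account_id: str) -> bool:
    """True if this (ad system, account ID) pair is listed by the publisher."""
    return any(r.ad_system == ad_system.lower() and r.account_id == account_id
               for r in records)

ADS_TXT = """\
# ads.txt for example-publisher.com (hypothetical)
greenadexchange.com, 12345, DIRECT, d75815a79
blueadexchange.com, XF436, RESELLER
CONTACT=adops@example-publisher.com
"""

records = parse_ads_txt(ADS_TXT)
print(is_authorized(records, "greenadexchange.com", "12345"))  # True
print(is_authorized(records, "greenadexchange.com", "99999"))  # False: possible spoofing
```

A missing entry does not prove fraud by itself, since the file may simply be out of date. It does mean the inventory is not being sold by a seller the publisher has authorized.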

2. Sellers.json

However, when several intermediaries take part in selling inventory, the chain of requests becomes even harder to follow, and ads.txt alone is no longer enough as a bot traffic management solution. This is when the next IAB initiative comes into play: sellers.json, a JSON file that SSPs and ad exchanges publish on their domains. It lists the sellers and intermediaries they work with, so all parties know whom they are transacting with.
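A sellers.json file is plain JSON, so checking it is straightforward. The sketch below assumes the top-level `sellers` array and the `seller_id`, `name`, `domain`, `seller_type`, and `is_confidential` fields from the IAB Tech Lab sellers.json specification. The sample entries and the helper name are hypothetical. In practice, a buyer would fetch the file from the exchange's domain and cross-check the listed `domain` against the publisher's ads.txt.

```python
import json
from typing import Optional

SELLERS_JSON = """{
  "version": "1.0",
  "sellers": [
    {"seller_id": "12345", "name": "Example Publisher",
     "domain": "example-publisher.com", "seller_type": "PUBLISHER"},
    {"seller_id": "67890", "is_confidential": 1, "seller_type": "INTERMEDIARY"}
  ]
}"""

def find_seller(sellers_json: str, seller_id: str) -> Optional[dict]:
    """Return the entry for `seller_id`, or None if the exchange does not list it."""
    data = json.loads(sellers_json)
    for seller in data.get("sellers", []):
        if str(seller.get("seller_id")) == seller_id:
            return seller
    return None

seller = find_seller(SELLERS_JSON, "12345")
print(seller["seller_type"], seller["domain"])   # PUBLISHER example-publisher.com
print(find_seller(SELLERS_JSON, "00000"))        # None: unknown seller, treat with suspicion
```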

3. SupplyChain object

The latest transparency initiative from the IAB is the SupplyChain Object. It describes the whole supply chain behind a bid request by listing every seller and intermediary involved, each with its seller ID. This way, the buyer gets a complete picture of all the players involved and can trace suspicious activity, including unauthorized bot traffic, back to its source.
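In OpenRTB bid requests, the SupplyChain Object carries a `complete` flag and a list of `nodes`. Each node names the advertising system domain (`asi`), the seller ID on that system (`sid`), and an `hp` flag marking participation in the payment flow. The sketch below flags incomplete chains and any node whose seller ID is missing from the corresponding sellers.json. It is a hypothetical check, not any specific vendor's logic, and it assumes those sellers.json files have already been fetched into a dictionary.

```python
def validate_schain(schain: dict, known_sellers: dict[str, set[str]]) -> list[str]:
    """Return the problems found in a SupplyChain object; an empty list means none found."""
    problems = []
    if schain.get("complete") != 1:
        problems.append("incomplete chain: not every intermediary is listed")
    for i, node in enumerate(schain.get("nodes", [])):
        asi = str(node.get("asi", "")).lower()
        sid = str(node.get("sid", ""))
        if node.get("hp") != 1:
            problems.append(f"node {i}: {asi} is not marked as a payment-flow participant")
        if sid not in known_sellers.get(asi, set()):
            problems.append(f"node {i}: seller {sid!r} not listed in {asi} sellers.json")
    return problems

chain = {"ver": "1.0", "complete": 1, "nodes": [
    {"asi": "exchange-a.com", "sid": "111", "hp": 1},
    {"asi": "exchange-b.com", "sid": "222", "hp": 1},
]}
known = {"exchange-a.com": {"111"}, "exchange-b.com": {"333"}}  # hypothetical sellers.json data
for problem in validate_schain(chain, known):
    print(problem)   # node 1: seller '222' not listed in exchange-b.com sellers.json
```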

Together, these initiatives make digital ad buying transparent by identifying every participant in a transaction. So, if an advertiser notices suspicious bot activity at any point, they can trace it through all the intermediaries down to the end seller and cut off any shifty players. Here is some quick advice: work only with digital ad partners who comply with IAB initiatives.

4. Complex anti-fraud tools

When dealing with sophisticated bots, keep in mind that they act within milliseconds. Defenses have to react just as fast, and in ways that don’t scare away real human users and customers. So, it is vital to find the right bot traffic management solution.

But even if you decide to settle on one or a couple of the available bot managing solutions, you will have to test them out beforehand.

We have nothing against the trial-and-error method, but we think it is better to play it safe when it comes to potential serious budget losses and deciding how to stop bot traffic.

With the growing variety of malicious bot traffic, it is not practical to use only one system. It is way more effective to use complex tools with different “fighting techniques” to stop malicious bots, which will improve all the statistics, from search visibility, session duration, and organic website traffic to ad revenue.

It is even more convenient to use a tool that combines all anti-fraud solutions in one, like those offered by Attekmi.

Attekmi anti-fraud scanners to the rescue

Attekmi ad exchange solutions allow you to enter the programmatic ecosystem promptly. With a user-friendly dashboard, smart optimization, and easy-to-grasp reports, this technology will save you a lot of time (and money) that you would otherwise spend getting to know a number of other solutions. Most importantly for this topic, one of its key features is managing all types of traffic bots with the help of traffic safety scanner providers.

So, whether you are an avid user of the technology or just choosing a tech product for your business, you should know that Attekmi has probably one of the most exhaustive collections of bot traffic management solutions on the market. All Attekmi scanners were created for this purpose: to pinpoint bad bots and other suspicious actions and protect your marketplace.

Attekmi platforms are equipped with time-tested instruments, like Pixalate, WhiteOps, and Forensiq, that offer advanced approaches to tracking and blocking unwanted bot traffic.

These are some of Attekmi’s traffic filtering procedures:

Mismatched IPs and Bundles throttling;

IFA and IPv4 missing requests throttling;

Secured filter bot traffic;

Adult traffic filtering;

Blocked CRIDs;

Blocked categories.
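For illustration only, here is what two of these procedures might look like on an OpenRTB-style bid request: throttling requests that lack an IFA or IPv4 address, and blocking categories. This is a hypothetical sketch, not Attekmi's implementation. It assumes the OpenRTB 2.x field names `device.ip`, `device.ifa`, and `site.cat`/`app.cat`, and the blocked category list is only an example.

```python
from typing import Optional

# Hypothetical blocklist using IAB content taxonomy (v1) tier-1 codes:
# IAB25 = Non-Standard Content, IAB26 = Illegal Content.
BLOCKED_CATEGORIES = {"IAB25", "IAB26"}

def rejection_reason(bid_request: dict) -> Optional[str]:
    """Return why a bid request should be dropped, or None to let it through."""
    device = bid_request.get("device", {})
    app = bid_request.get("app")
    if not device.get("ip"):
        return "missing IPv4 address"
    if app is not None and not device.get("ifa"):
        return "missing IFA (advertising ID) on app traffic"
    placement = app if app is not None else bid_request.get("site", {})
    for category in placement.get("cat", []):
        if category.split("-")[0] in BLOCKED_CATEGORIES:   # "IAB25-3" -> "IAB25"
            return f"blocked category {category}"
    return None

request = {"site": {"domain": "example.com", "cat": ["IAB25-3"]},
           "device": {"ip": "203.0.113.7"}}
print(rejection_reason(request))   # blocked category IAB25-3
```

Site traffic is not required to carry an IFA here, because advertising IDs are typically only available in apps.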

Find out how else we help businesses in our case studies.

Afterword

Analyzing bot traffic and taking measures to prevent it is crucial not only for advertisers and publishers. Ad exchange owners need to ensure the safety of their marketplaces, and using a solution from Attekmi is the way to simplify this task.

Additionally, it’s important to keep an eye on the evolution of bots and the development of anti-fraud tools. This way, it becomes possible for all the parties involved to adapt bot detection strategies on time.

Want your clients’ ads and website traffic to be bot-free? Contact us today!

FAQ

Are traffic bots legal?

Traffic bots can be legal or illegal, depending on their purpose and use. Legitimate bots, such as Googlebot or website monitoring bots, are legal and serve useful functions. However, bots designed for malicious activities like click fraud, spamming, or hacking are generally illegal and can lead to legal consequences. It’s crucial to distinguish between lawful and unlawful bot activities.

How can I block traffic bots?

To block traffic bots, consider implementing a web application firewall (WAF), using CAPTCHA tests, employing bot detection tools, configuring robots.txt files, blocking specific IP addresses, analyzing user behavior for bot-like patterns, and implementing rate-limiting measures to restrict excessive requests from a single IP address. These strategies help safeguard websites from unwanted bot traffic.

Why does my site receive bot traffic?

A site may receive bot traffic for various reasons. Legitimate search engine crawlers index your content. Malicious bots engage in spam, click fraud, or hacking attempts. Competitors or data aggregators may scrape your site. Some bots probe for security vulnerabilities. Others artificially inflate ad engagement for ad fraud. It’s crucial to differentiate between legitimate and malicious bot traffic and implement measures accordingly.

By Anastasiia Lushyna
